Software delivery performance refers to how effectively a development team transforms ideas, requirements, and code into stable, working software that reaches users on time. It looks beyond simple deadlines and focuses on the flow of work across the entire delivery lifecycle, from planning and development to testing, deployment, and post-release stability. In nearshore setups, delivery performance reflects how well teams coordinate, adapt to changing priorities, and maintain momentum without compromising quality. By understanding software delivery performance and how to measure it, businesses gain a clearer picture of how their development process functions in real-world conditions, rather than how it appears on project plans or timelines.

Measuring software delivery performance is essential because it turns day-to-day development activity into clear, decision-ready insight. In nearshore teams, where collaboration happens across borders but within similar time zones, performance metrics help ensure that speed, quality, and accountability stay aligned with business goals. Without clear measurement, it becomes difficult to know whether a team is truly delivering value or simply staying busy. Tracking delivery performance allows companies to spot bottlenecks early, understand how efficiently features move from planning to production, and maintain predictable release cycles. More importantly, it creates a shared language between stakeholders and nearshore teams, reducing assumptions and setting realistic expectations. When performance is measured consistently, nearshore partnerships become easier to manage, easier to scale, and far more reliable in supporting long-term product growth.

Software delivery performance describes how efficiently a development team converts requirements and ideas into reliable software that is delivered to users. It covers the full journey of work, starting from planning and development and moving through testing, deployment, and ongoing maintenance. In nearshore teams, delivery performance is especially important because it reflects how well distributed teams collaborate, communicate, and maintain consistency across locations. Strong delivery performance means features are released on time, quality remains stable, and teams can adapt quickly to change. Measuring this performance gives organizations visibility into how their delivery process truly functions beyond timelines and promises, allowing leaders to make informed decisions and continuously improve outcomes.

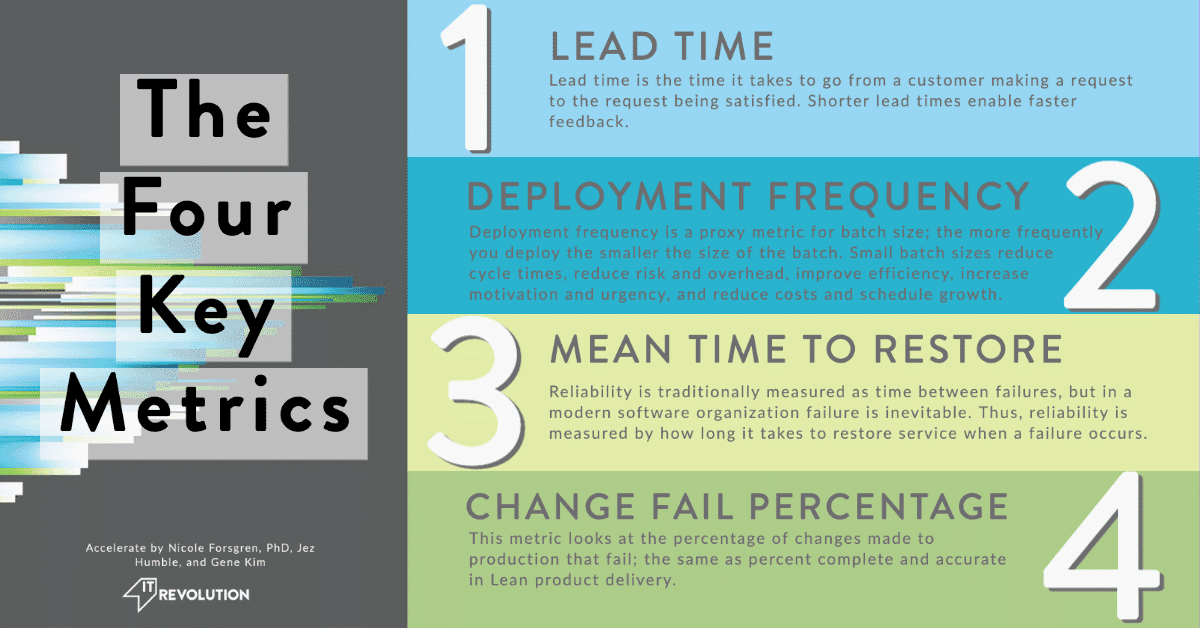

The most effective way to measure software delivery performance is to start with a small set of proven metrics that reflect both speed and stability. Rather than tracking dozens of activity-based indicators, high-performing organizations focus on a few core measurements that reveal how work flows through the system. These metrics help teams understand how frequently software is delivered, how long it takes for changes to reach production, how often releases cause issues, and how quickly problems are resolved.

For nearshore teams, these measurements provide a shared understanding of performance across locations. They remove guesswork and assumptions by offering clear, consistent indicators that both technical teams and business stakeholders can rely on. When used together, these metrics create a balanced view of delivery health rather than focusing only on speed or only on quality.

Deployment frequency measures how often a nearshore team successfully releases software into production. This can be tracked daily, weekly, or monthly depending on the product and release strategy. Higher deployment frequency generally indicates a smoother delivery pipeline, smaller and safer changes, and stronger automation practices.

In nearshore environments, deployment frequency also reflects how well teams coordinate across borders. Regular releases show that reviews, approvals, and testing are happening without unnecessary delays. Infrequent deployments often signal bottlenecks such as manual processes, unclear ownership, or slow decision-making. By tracking deployment frequency over time, organizations can see whether their delivery flow is improving or becoming more restrictive.
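As a rough illustration, deployment frequency can be computed by grouping successful production releases by calendar week. The sketch below is a minimal example, not a prescribed implementation: the function name `deployments_per_week` and the sample dates are hypothetical, and it assumes the team can export a list of deployment dates from its pipeline tooling.

```python
from collections import Counter
from datetime import date


def deployments_per_week(deploy_dates):
    """Count successful production deployments per ISO (year, week)."""
    counts = Counter()
    for d in deploy_dates:
        iso = d.isocalendar()  # (ISO year, ISO week number, ISO weekday)
        counts[(iso[0], iso[1])] += 1
    return dict(counts)


# Hypothetical deployment dates, for illustration only.
deploys = [
    date(2024, 3, 4),
    date(2024, 3, 6),
    date(2024, 3, 7),
    date(2024, 3, 12),
]

weekly = deployments_per_week(deploys)
for (year, week), count in sorted(weekly.items()):
    print(f"{year}-W{week:02d}: {count} deployment(s)")
```

Grouping by week is only one option. A team that releases several times a day may prefer daily buckets, while a team with a slower release strategy may prefer monthly ones.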

Lead time for changes measures the total time it takes for a piece of work to move from development to production. The clock starts when work begins, for example when code is committed or a task is picked up, and stops when the change is live and usable. Whichever starting point a team chooses, it should be applied consistently so that trends stay comparable over time. Shorter lead times indicate efficient workflows, while longer lead times often highlight delays within the delivery pipeline.

For nearshore teams, lead time is particularly useful for identifying hidden friction. Delays may occur during code reviews, testing phases, or handoffs between teams in different locations. Measuring lead time helps leaders pinpoint where work slows down and why. Once these problem areas are visible, teams can improve tooling, refine processes, or adjust collaboration methods to keep work moving smoothly.
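One way to put a number on lead time is to take the elapsed time between the chosen start event and the production release for each change, then summarize with the median so that a single slow change does not distort the picture. The sketch below assumes the commit timestamp is the start event; the function name `lead_times_hours` and the sample data are hypothetical.

```python
from datetime import datetime
from statistics import median


def lead_times_hours(changes):
    """Return the lead time in hours for each (committed, deployed) pair."""
    return [
        (deployed - committed).total_seconds() / 3600
        for committed, deployed in changes
    ]


# Hypothetical (commit time, production deploy time) pairs.
changes = [
    (datetime(2024, 3, 4, 9, 0), datetime(2024, 3, 4, 15, 0)),
    (datetime(2024, 3, 5, 10, 0), datetime(2024, 3, 6, 10, 0)),
    (datetime(2024, 3, 6, 8, 0), datetime(2024, 3, 8, 8, 0)),
]

hours = lead_times_hours(changes)
median_lead_time = median(hours)
print(f"Median lead time: {median_lead_time:.1f} hours")
```

The median is a design choice rather than a rule. Teams that want to watch their slowest changes could report a high percentile alongside it.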

Change failure rate measures how often deployments result in issues that require fixes, rollbacks, or emergency interventions. This metric is critical because it directly reflects the stability and reliability of the software being delivered. A high failure rate suggests gaps in testing, unclear requirements, or rushed releases, while a low failure rate indicates strong quality practices.

In nearshore teams, this measurement also reflects alignment around quality standards. Teams working from different locations must share the same understanding of what a successful release looks like. Tracking change failure rate encourages teams to invest in automated testing, clearer acceptance criteria, and better validation before deployment. Over time, reducing failure rates leads to fewer disruptions and higher confidence in the delivery process.
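Change failure rate is a simple ratio: deployments that needed a fix, rollback, or emergency intervention, divided by all deployments in the same period. The sketch below shows the arithmetic under the assumption that each deployment record carries a flag marking whether it caused such an issue; the field name `caused_incident` and the sample records are hypothetical.

```python
def change_failure_rate(deployments):
    """Return the fraction of deployments that required remediation."""
    if not deployments:
        return 0.0  # No deployments in the period, so nothing failed.
    failed = sum(1 for d in deployments if d["caused_incident"])
    return failed / len(deployments)


# Hypothetical records: 10 deployments, 2 of which needed a rollback or hotfix.
deployments = (
    [{"id": i, "caused_incident": False} for i in range(8)]
    + [{"id": 8, "caused_incident": True}, {"id": 9, "caused_incident": True}]
)

rate = change_failure_rate(deployments)
print(f"Change failure rate: {rate:.0%}")
```

The ratio is only as reliable as the shared definition of a failed release, which is why agreeing on that definition across locations matters.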

Time to restore service measures how quickly a team can recover when something goes wrong in production. No delivery process is perfect, and incidents are inevitable. What matters most is how efficiently teams respond and resolve issues. This metric captures the time between detecting a problem and fully restoring normal service.

For nearshore teams, fast recovery depends on clear communication channels, shared incident response processes, and access to the right tools and documentation. Measuring restoration time helps organizations understand whether their teams are prepared to handle disruptions. Improvements in this area often come from better monitoring, clearer escalation paths, and well-defined responsibilities during incidents.
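Time to restore service can be sketched as the average gap between the moment a problem is detected and the moment normal service resumes. The example below assumes incident records expose those two timestamps; the function name `mean_time_to_restore` and the sample incidents are hypothetical.

```python
from datetime import datetime, timedelta


def mean_time_to_restore(incidents):
    """Average duration between detection and restoration, or None if empty."""
    if not incidents:
        return None
    total = sum(
        (restored - detected for detected, restored in incidents),
        timedelta(),  # Start value so sum() adds timedeltas, not integers.
    )
    return total / len(incidents)


# Hypothetical (detected, restored) timestamps for two incidents.
incidents = [
    (datetime(2024, 3, 4, 14, 0), datetime(2024, 3, 4, 14, 30)),
    (datetime(2024, 3, 9, 2, 0), datetime(2024, 3, 9, 3, 30)),
]

mttr = mean_time_to_restore(incidents)
print(f"Mean time to restore: {mttr}")
```

Starting the clock at detection matches the definition above. It does not capture how long a problem went unnoticed, which is a separate question for monitoring.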

While delivery metrics provide deep insight into engineering performance, they become even more valuable when connected to business outcomes. Software delivery performance should support goals such as faster time to market, improved customer satisfaction, and higher product adoption. Measuring how delivery speed and stability affect these outcomes helps leaders understand the real value of their nearshore teams.

For example, consistent and predictable delivery can enable faster feature launches, while stable releases reduce customer complaints and support costs. By linking delivery performance to business results, organizations ensure that technical improvements align with strategic priorities rather than existing in isolation.

Numbers alone do not tell the full story of delivery performance. Team health, communication quality, and collaboration effectiveness play a major role in long-term success. Nearshore teams that communicate openly, share feedback, and feel supported tend to perform better over time. While these factors are harder to measure, regular feedback sessions and retrospectives help surface issues early. Including qualitative insights alongside delivery metrics creates a more complete performance picture. This approach ensures that improvements are sustainable and that teams are not sacrificing well-being or collaboration in the pursuit of speed.

Measuring software delivery performance is not a one-time activity. It is an ongoing process that supports continuous improvement. Teams should establish baseline measurements, review trends regularly, and set realistic improvement goals. Dashboards and automated tracking tools can help maintain visibility without adding reporting overhead. Most importantly, metrics should be used as learning tools rather than evaluation weapons. When teams understand why metrics are tracked and how they help improve outcomes, they are more likely to engage with the data and take ownership of improvements. Over time, this creates a culture of transparency, accountability, and steady progress across nearshore delivery teams.

From Blue Coding's point of view, software delivery performance is the difference between teams that react and teams that lead. That is why we help our partners look beyond deadlines and focus on how work truly moves from idea to production. By combining nearshore expertise with proven delivery practices, we enable teams to release with confidence, adapt faster to change, and maintain quality at every stage of development. For us, performance measurement is not about pressure or control. It is about visibility, continuous improvement, and building software that stands up to real-world demands. If you are ready to work with a nearshore partner that treats delivery performance as a strategic advantage, contact Blue Coding and start building software that moves your business forward. We offer complimentary strategy calls if you are interested!
